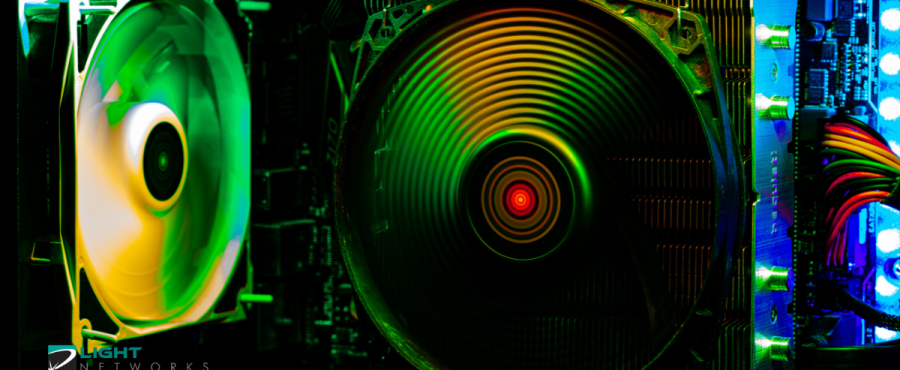

AMD vs. NVIDIA GPU

Some companies compare AMD vs. NVIDIA GPUs on surface-level specs, but technology leaders look deeper. Real performance is not only about raw frame rates or clock speeds. It is about how each architecture handles parallel workloads, how it integrates with the cloud, and how well it accelerates AI in data-driven environments.

At LightWave Networks, we see these differences firsthand when organizations deploy GPU workloads in data centers, hybrid environments, and private cloud infrastructure. AMD and NVIDIA remain the two dominant forces in the GPU industry.

A GPU, or graphics processing unit, is built to perform large numbers of calculations at once. Where a CPU runs a relatively small number of threads, each stepping through its instructions largely in sequence, a GPU applies the same operation to thousands of data elements in parallel. That structure makes GPUs essential for high-performance workloads such as machine learning, video rendering, edge computing, automation, and modern gaming.
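To make the parallel model concrete, here is a minimal Python sketch of the data-parallel pattern that GPU programming models such as CUDA and HIP use: a small function (a "kernel") is written once, and every thread runs it on a single element, locating that element from its block and thread index. The names `vector_add_kernel` and `launch` are hypothetical. Plain Python has no GPU, so the loop in `launch` visits each thread one at a time, where real hardware would run them concurrently.

```python
from typing import List


def vector_add_kernel(block_idx: int, block_dim: int, thread_idx: int,
                      a: List[float], b: List[float], out: List[float]) -> None:
    """Work done by ONE thread: add a single pair of elements."""
    i = block_idx * block_dim + thread_idx  # global element index for this thread
    if i < len(out):  # guard: the last block may contain spare threads
        out[i] = a[i] + b[i]


def launch(a: List[float], b: List[float], block_dim: int = 4) -> List[float]:
    """Emulate a kernel launch that covers every element of the inputs."""
    n = len(a)
    out = [0.0] * n
    grid_dim = (n + block_dim - 1) // block_dim  # ceil(n / block_dim) blocks
    # A GPU executes all (block, thread) pairs at the same time;
    # this nested loop simply walks through them sequentially.
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            vector_add_kernel(block_idx, block_dim, thread_idx, a, b, out)
    return out


print(launch([1.0, 2.0, 3.0, 4.0, 5.0], [10.0, 20.0, 30.0, 40.0, 50.0]))
# [11.0, 22.0, 33.0, 44.0, 55.0]
```

The kernel never loops over the data itself. Scaling to a larger array only means launching more blocks, which is why this style maps so well onto hardware with thousands of cores.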

Choosing between the two is not only about achieving high-speed results. It is a long-term infrastructure choice that determines how workloads scale, how systems integrate with the cloud, and how performance grows over time.

What CTOs and CIOs Evaluate in GPUs

The real question for technology leaders is not who wins the benchmarks in a lab. It is, “Which platform offers the stronger return on investment when deployed across business environments?” A modern AMD vs. NVIDIA GPU comparison must answer questions that go beyond performance metrics.

CTOs and CIOs are asking:

- How does each architecture scale in AI and data acceleration?

- Which ecosystem provides better cloud compatibility?

- Which GPUs deliver the best performance per watt at scale?

- How does vendor lock-in affect long-term operational freedom?

These questions reflect a strategic mindset. Most enterprise GPU purchases are not one-off hardware events. They are part of five- to ten-year hybrid buildouts spanning AI training, virtualization, edge deployments, and private cloud infrastructure.

Architecture: RDNA/CDNA vs. CUDA-Based Designs

What separates an AMD vs. NVIDIA GPU is not only performance output but architectural intent. AMD's current architectures, RDNA for Radeon graphics and CDNA for Instinct data center accelerators, are paired with a software strategy that emphasizes open development, modular scalability, and strong performance per dollar across compute workloads. The aim is to give enterprises flexibility without relying on a proprietary vendor ecosystem, while providing high memory bandwidth for workloads that push large data streams through the processor.

NVIDIA approaches the same mission through a tightly integrated stack. CUDA, Tensor Cores, and specialized libraries such as cuDNN and cuBLAS form a vertically integrated ecosystem. This delivers outstanding acceleration for AI, machine learning, and scientific computing. However, it also raises long-term questions about vendor lock-in.

Where AMD builds on open-source software such as ROCm and HIP and supports open standards like OpenCL, NVIDIA prioritizes proprietary optimization paths. The trade-off is clear. AMD promotes adaptability across distributed environments, while NVIDIA offers unmatched acceleration within its own unified, proprietary ecosystem.
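One practical example of how the open path can lower switching costs: PyTorch's ROCm builds expose AMD GPUs through the same `torch.cuda` interface and `"cuda"` device string that CUDA builds use for NVIDIA GPUs, so a lot of framework-level code runs unchanged on either vendor. The sketch below reports which backend the local PyTorch build targets. It assumes PyTorch may or may not be installed, and `detect_gpu_backend` and `pick_device` are hypothetical helper names.

```python
def detect_gpu_backend() -> str:
    """Return 'rocm', 'cuda', 'cpu', or 'no-torch' for the local PyTorch build."""
    try:
        import torch  # optional dependency; may be absent
    except ImportError:
        return "no-torch"
    if not torch.cuda.is_available():
        return "cpu"
    # ROCm builds of PyTorch set torch.version.hip to a version string;
    # CUDA builds leave it as None.
    if getattr(torch.version, "hip", None):
        return "rocm"
    return "cuda"


def pick_device() -> str:
    """Device string for model code: both AMD (ROCm) and NVIDIA (CUDA) use 'cuda'."""
    return "cuda" if detect_gpu_backend() in ("rocm", "cuda") else "cpu"


if __name__ == "__main__":
    print(f"backend={detect_gpu_backend()} device={pick_device()}")
```

Portability at this level does not extend automatically to hand-written CUDA kernels or vendor-specific libraries. Those usually need porting, for example with AMD's HIPIFY tools, which is where switching costs show up in practice.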

In practice, the difference can influence how CIOs design infrastructure lifecycles and how quickly teams can pivot as new compute needs emerge.

Enterprise Deployment Considerations

Technology leaders comparing an AMD vs. NVIDIA GPU are rarely asking which card is faster on paper. They are asking how each platform performs in real-world deployments across remote clusters, high-density racks, financial modeling systems, LLM training, and real-time automation.

Decision makers tend to evaluate three areas of enterprise deployment.

Cross-Platform Compatibility

AMD offers more flexibility across Linux distributions, open-source workflows, and cross-platform hardware stacks. NVIDIA delivers deeper integration between popular AI frameworks and its proprietary CUDA libraries, along with wide availability of specialized cloud accelerators.

Long-Term Scalability

AMD’s open software path can reduce future switching costs. NVIDIA’s tightly optimized stack can generate exceptional performance, but often increases reliance on its ecosystem as time goes on.

Cost and Power Efficiency

AMD often provides favorable pricing per teraflop and predictable scaling of compute per watt. NVIDIA delivers extremely high acceleration efficiency under heavy AI workloads, particularly where Tensor Core utilization is strong.
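Both metrics in this comparison are simple ratios, and normalizing candidate cards the same way keeps the comparison honest. The sketch below computes dollars per TFLOP and TFLOPS per watt. The card names and every number are placeholders, not vendor figures. Use sustained throughput at the precision your workload actually runs (for example FP16 or FP8 for AI training, FP64 for many scientific codes), because Tensor Core and matrix-engine throughput varies widely across precisions.

```python
from dataclasses import dataclass


@dataclass
class GpuSpec:
    name: str
    price_usd: float      # purchase price per card (placeholder)
    tflops: float         # sustained TFLOPS at the precision you use (placeholder)
    board_power_w: float  # typical board power under that workload (placeholder)

    def usd_per_tflop(self) -> float:
        """Cost efficiency: lower is better."""
        return self.price_usd / self.tflops

    def tflops_per_watt(self) -> float:
        """Energy efficiency: higher is better."""
        return self.tflops / self.board_power_w


# Hypothetical cards for illustration only.
cards = [
    GpuSpec("card_a", price_usd=10_000, tflops=50, board_power_w=500),
    GpuSpec("card_b", price_usd=20_000, tflops=80, board_power_w=700),
]

for c in cards:
    print(f"{c.name}: ${c.usd_per_tflop():,.0f}/TFLOP, "
          f"{c.tflops_per_watt():.3f} TFLOPS/W")
```

In this made-up example, card_a wins on cost per TFLOP while card_b is slightly more energy efficient, which is exactly the kind of split that makes the decision a workload question rather than a spec-sheet one.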

Each choice has strategic cost implications. CTOs are not choosing a single GPU. They are choosing a future infrastructure model.

Pricing Dynamics and Total Cost of Ownership

Raw MSRP does not tell the whole story. Enterprise GPU pricing also includes licensing, integration, optimization, vendor support, and cloud access fees. AMD's pricing can be more predictable because it carries fewer proprietary software dependencies. NVIDIA's ROI may still be higher when an organization needs specialized AI acceleration at scale.
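A simple total cost of ownership model makes those hidden line items visible. The sketch below sums up-front, recurring, and energy costs over a deployment's lifetime. The function name and cost categories are an illustrative breakdown of the items listed above, and all input values are placeholders for one hypothetical GPU node, not real quotes.

```python
def total_cost_of_ownership(
    hardware_usd: float,
    integration_usd: float,        # one-time porting, tuning, and optimization work
    annual_licensing_usd: float,   # software licenses and subscriptions
    annual_support_usd: float,     # vendor support contracts
    annual_cloud_usd: float,       # cloud access or burst capacity fees
    avg_power_kw: float,           # average draw, including cooling overhead
    usd_per_kwh: float,
    years: int,
) -> float:
    """Sum up-front, recurring, and energy costs over the deployment lifetime."""
    upfront = hardware_usd + integration_usd
    recurring = (annual_licensing_usd + annual_support_usd + annual_cloud_usd) * years
    energy = avg_power_kw * (years * 365 * 24) * usd_per_kwh  # kW x hours = kWh
    return upfront + recurring + energy


# Placeholder inputs for one hypothetical GPU node over five years.
tco = total_cost_of_ownership(
    hardware_usd=100_000,
    integration_usd=20_000,
    annual_licensing_usd=5_000,
    annual_support_usd=8_000,
    annual_cloud_usd=2_000,
    avg_power_kw=5.0,
    usd_per_kwh=0.10,
    years=5,
)
print(f"Five-year TCO: ${tco:,.0f}")  # Five-year TCO: $216,900
```

Running the same model with each vendor's actual quotes, expected utilization, and the engineering effort needed to port existing code turns the MSRP comparison into a lifecycle comparison.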

The right investment depends on workload profiles. Startups focusing on cloud flexibility and distributed training often favor AMD for budget versatility. Meanwhile, enterprises focused on deep learning acceleration typically lean toward NVIDIA to maximize performance per training cycle.

Target Workloads: Where Each GPU Excels

Different GPUs excel at different enterprise needs. The comparison is less about better or worse and more about alignment.

AMD Strengths

Strong value per dollar, scalable open-source development workflows, attractive energy efficiency across distributed compute, and competitive performance in virtualization, content creation, and containerized Linux environments.

NVIDIA Strengths

Industry-leading acceleration for AI, deep learning, and high-performance scientific computing, driven by CUDA, Tensor Core optimization, and exclusive libraries that deliver huge performance gains when fully utilized.

In short, an AMD vs. NVIDIA GPU decision becomes a workload decision. CIOs choose based on whether the strategy prioritizes open architecture flexibility or maximum proprietary acceleration.

Cloud Ecosystem Alignment

As organizations adopt hybrid and multi-cloud architectures, GPU decisions now affect cloud automation, container orchestration, and bursting capability. AMD aligns strongly with providers that support open platforms across containerized cloud stacks and broader cloud computing environments. NVIDIA strengthens its position with highly tuned cloud accelerators and managed AI services.

The question becomes less “Which GPU is more powerful?” and more “Which platform prepares our infrastructure for cloud-native acceleration over the next five to ten years?”

Why Businesses Trust LightWave Networks for GPU Workloads

LightWave Networks helps enterprises make GPU decisions based on measurable strategic value. Whether a business chooses AMD, NVIDIA, or a hybrid strategy, LightWave evaluates workload profiles, cluster scaling requirements, and cloud alignment to guide long-term infrastructure decisions.

By providing hosting, colocation, network engineering, and GPU-ready data center environments, LightWave ensures organizations maximize performance without overspending on vendor ecosystems or licensing models. Clients gain predictable compute capacity, cost-efficient GPU deployment, and cross-platform flexibility designed around their exact workloads.

Future-Ready GPU Strategy

An AMD vs. NVIDIA GPU decision shapes compute infrastructure for years. The right answer depends on how an organization plans to scale, not which GPU looks superior on paper. Open optimization, proprietary acceleration, cloud readiness, and vendor ecosystem risk all shape the outcome.

Technology leaders who choose based on workload strategy build systems that outperform trends instead of chasing them. The goal is not to buy a GPU. It is to build a platform that evolves with data, automation, and AI demands.

Frequently Asked Questions About GPUs

What does GPU stand for?

GPU stands for graphics processing unit. It is a processor engineered to compute many tasks simultaneously, making it ideal for workloads like AI, rendering, and parallel computing.

What is the difference between a CPU and a GPU?

A central processing unit (CPU) is designed to handle many kinds of tasks, largely one after another. It manages the operating system, runs applications, and coordinates everything the computer does. CPUs have relatively few cores compared with GPUs, but each core is powerful and flexible, which makes CPUs ideal for logic, decision-making, and step-by-step instructions.

A GPU is designed to handle one type of task thousands of times at the same time. Instead of a few powerful cores, it has many smaller cores that work together. This structure makes it ideal for parallel workloads such as rendering graphics, training AI models, processing large datasets, and running complex simulations.

Both are processors, but they are built for different purposes. The CPU focuses on general decision-based computing, while the GPU accelerates massive parallel workloads, including but not limited to graphics.

What is a dedicated GPU?

A dedicated (or discrete) GPU is a separate graphics processor with its own memory, rather than integrated graphics that are built into the CPU and share system memory. It provides significantly higher performance for applications that require powerful parallel computing.

What does overclocking a GPU do?

Overclocking a GPU means manually increasing its operating clock speed beyond the manufacturer’s default settings. This forces the GPU to run faster than intended. The process can deliver higher performance, but it also generates more heat, draws more power, and may reduce stability if cooling or power limits are not managed correctly.

What does undervolting a GPU do?

Undervolting lowers the voltage supplied to a GPU, which reduces power draw and heat and improves efficiency. When done correctly, the GPU maintains strong performance while running cooler.
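Why do small voltage changes matter so much for both overclocking and undervolting? The standard approximation for a chip's dynamic (switching) power is P ≈ C × V² × f: power scales with the square of voltage but only linearly with clock speed. The helper below applies that ratio. Its name is hypothetical, and it ignores static leakage power and each card's own power-limit and boost behavior, so treat its output as a rough guide rather than a prediction for any specific GPU.

```python
def relative_dynamic_power(voltage_scale: float, clock_scale: float) -> float:
    """Dynamic power relative to stock settings, using P_dyn ~ C * V^2 * f.

    voltage_scale and clock_scale are ratios to stock (1.0 = unchanged).
    Switched capacitance C is assumed constant; leakage power is ignored.
    """
    return voltage_scale ** 2 * clock_scale


# Undervolt: 10% lower voltage at the stock clock.
print(round(relative_dynamic_power(0.90, 1.00), 3))  # 0.81

# Overclock: 5% higher clock that needs 5% more voltage to stay stable.
print(round(relative_dynamic_power(1.05, 1.05), 3))  # 1.158
```

Under this model, a 10% undervolt cuts dynamic power by roughly 19% with no clock loss, while a 5% overclock that also needs 5% more voltage raises it by almost 16%. That asymmetry is why overclocking adds heat quickly and why undervolting is popular in dense, thermally constrained racks.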

What is the best AMD GPU?

The best AMD GPU depends on the workload. AMD's Instinct data center accelerators and Radeon PRO workstation cards excel in open development and scalable parallel workloads, while top consumer Radeon models compete in rendering and gaming performance.

What is NVIDIA known for?

NVIDIA is known for AI acceleration, enterprise compute, and its CUDA-based software stack, which is widely used in deep learning, scientific computing, video editing, and automation.

Choosing the Right GPU Strategy for Your Business

If your business is building infrastructure around modern GPU workloads, LightWave Networks can help you design systems that scale with AI, cloud acceleration, and demanding parallel computing. Our team evaluates AMD, NVIDIA, and hybrid deployments based on measurable ROI, energy efficiency, and workload performance. Connect with LightWave Networks today to build a future-ready architecture and request a free estimate.
